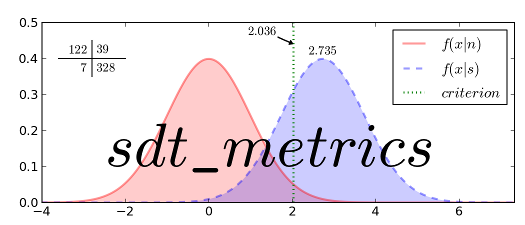

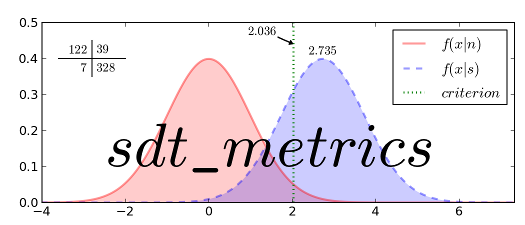

A class for storing signal detection data

The class is intended to be used as a data structure for keeping track of signal detection data. It also supports the general functions of sdt_metrics.

Add counts from two SDTs.

Intersection is the minimum of corresponding counts.

adds event to object

D = SDT(); D(HI) <==> D.update([HI]) <==> D[HI] += 1
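The three equivalent ways of counting one event can be illustrated with a minimal sketch. `MiniSDT` is a hypothetical stand-in built on `collections.Counter`, not the library's `SDT` class, and the string `"HI"` is an assumed label for a hit event.

```python
from collections import Counter


class MiniSDT(Counter):
    """Hypothetical stand-in for SDT: a Counter keyed by event type."""

    def __call__(self, event):
        # D(event) is shorthand for D.update([event])
        self.update([event])


HI = "HI"  # assumed event-type label for a hit

d = MiniSDT()
d(HI)           # call form
d.update([HI])  # update form
d[HI] += 1      # item form; a missing key counts as zero
print(d[HI])    # 3
```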

x.__cmp__(y) <==> cmp(x,y)

x.__delattr__('name') <==> del x.name

Like dict.__delitem__() but does not raise KeyError for missing values.

x.__eq__(y) <==> x==y

default object formatter

x.__ge__(y) <==> x>=y

x.__getattribute__('name') <==> x.name

x.__getitem__(y) <==> x[y]

x.__gt__(y) <==> x>y

Create a new, empty SDT object. If given, count elements from an input iterable, or initialize the counts from another mapping of elements to their counts.

iterates over event types

x.__le__(y) <==> x<=y

x.__len__() <==> len(x)

x.__lt__(y) <==> x<y

The count of elements not in the SDT is zero.

x.__ne__(y) <==> x!=y

Union is the maximum of the values in either of the input SDTs.

helper for pickle

x.__setattr__('name', value) <==> x.name = value

sdt.__setitem__(key, value) <==> sdt[key] = value

x.__str__() <==> str(x)

Subtract count, but keep only results with positive counts.

Abstract classes can override this to customize issubclass().

This is invoked early on by abc.ABCMeta.__subclasscheck__(). It should return True, False or NotImplemented. If it returns NotImplemented, the normal algorithm is used. Otherwise, it overrides the normal algorithm (and the outcome is cached).

list of weak references to the object (if defined)

accuracy: (1.+p(HI) - p(FA)) / 2.
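The accuracy formula averages the hit rate p(HI) and the correct-rejection rate 1 - p(FA), so it equals proportion correct when signal and noise trials are equally frequent. A minimal sketch (the function name is illustrative):

```python
def accuracy(p_hi: float, p_fa: float) -> float:
    """(1 + p(HI) - p(FA)) / 2, the mean of the hit and correct-rejection rates."""
    return (1.0 + p_hi - p_fa) / 2.0


print(accuracy(0.75, 0.25))  # 0.75
```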

A: Zhang and Mueller’s ROC-Based Measure of Sensitivity

Smith [1]_ remedied a common confusion with A’ and suggested an improved measure, A’’ (not implemented). Zhang and Mueller [2]_ found that Smith had made a mathematical error and properly formulated a new nonparametric measure of sensitivity, which they called A. Here it is called amzs() in honor of Mueller, Zhang, and Smith.

See also

| [1] | Smith, W. D. (1995). Clarification of Sensitivity Measure A’. Journal of Mathematical Psychology 39, 82-89. |

| [2] | Zhang, J., and Mueller, S. T. (2005). A note on ROC analysis and non-parametric estimate of sensitivity. Psychometrika, 70, 145-154. |
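Zhang and Mueller's A is piecewise in the hit rate h and false-alarm rate f. The sketch below follows their three cases as commonly stated and covers only f <= h, excluding the corners h == 0 and f == 1 where the formula divides by zero; the function name mirrors amzs() but its signature here is an assumption.

```python
def amzs(h: float, f: float) -> float:
    """Zhang and Mueller's A from hit rate h and false-alarm rate f (sketch)."""
    if not (0.0 <= f <= h <= 1.0) or h == 0.0 or f == 1.0:
        raise ValueError("sketch assumes 0 <= f <= h <= 1, h > 0, f < 1")
    if f <= 0.5 <= h:
        return 0.75 + (h - f) / 4.0 - f * (1.0 - h)
    if h < 0.5:  # here f <= h < 0.5
        return 0.75 + (h - f) / 4.0 - f / (4.0 * h)
    # remaining case: 0.5 < f <= h
    return 0.75 + (h - f) / 4.0 - (1.0 - h) / (4.0 * (1.0 - f))


print(amzs(0.5, 0.5))  # 0.5 (chance)
print(amzs(1.0, 0.0))  # 1.0 (perfect discrimination)
```

The three branches agree where they meet (at h = 0.5 and at f = 0.5), and every branch returns 0.5 when h == f.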

A’: Non-parametric measure of sensitivity

Devised by Pollack and Norman [1]_, and later reformulated and popularized by Grier [2]_. Smith [3]_ provides a nice historical overview, literature review, and analysis of A’.

See also

| [1] | Pollack, I., and Norman, D. A. (1964). A non-parametric analysis of recognition experiments. Psychonomic Science, 1, 125-126. |

| [2] | Grier, J. B. (1971). Nonparametric indexes for sensitivity and bias: Computing formulas. Psychological Bulletin, 75, 424-429. |

| [3] | Smith, W. D. (1995). Clarification of Sensitivity Measure A’. Journal of Mathematical Psychology 39, 82-89. |
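A’ is usually computed with Grier's formula when h >= f, plus a mirrored branch for below-chance performance (h < f). A minimal sketch, with an illustrative function name:

```python
def aprime(h: float, f: float) -> float:
    """A' from hit rate h and false-alarm rate f (sketch)."""
    if h == f:
        return 0.5  # chance performance
    if h > f:
        return 0.5 + ((h - f) * (1.0 + h - f)) / (4.0 * h * (1.0 - f))
    # mirrored branch for h < f
    return 0.5 - ((f - h) * (1.0 + f - h)) / (4.0 * f * (1.0 - h))


print(aprime(0.8, 0.2))  # about 0.875
```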

B: 0.5*p(HI) + 0.5*p(FA)

Bar-Napkin measure of Response Bias. Implemented for comparative purposes.

beta: classic parametric measure of response bias [1]_.

Use of c() (or loglinear_c()) as a parametric measure of response bias may be preferred.

This function applies the Macmillan and Kaplan [2]_ correction to extreme values.

See also

| [1] | Green, D. M., and Swets, J. A. (1966/1988). Signal detection theory and psychophysics, reprint edition. Los Altos, CA: Peninsula Publishing. |

| [2] | Macmillan, N. A., and Kaplan, H. L. (1985). Detection theory analysis of group data: Estimating sensitivity from average hit and false-alarm rates. Psychological Bulletin, 98, 185-199. |
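A sketch of beta from raw counts, using the usual computing formula beta = exp((z(F)^2 - z(H)^2) / 2) and the Macmillan and Kaplan replacement of 0 and 1 rates. The argument order (hi, mi, cr, fa) and the helper names are assumptions, and n is taken to be the number of trials behind each rate.

```python
import math
from statistics import NormalDist


def corrected_rate(k: int, n: int) -> float:
    """k / n, with 0 -> 1/(2n) and 1 -> 1 - 1/(2n) (Macmillan and Kaplan)."""
    if k == 0:
        return 1.0 / (2 * n)
    if k == n:
        return 1.0 - 1.0 / (2 * n)
    return k / n


def beta(hi: int, mi: int, cr: int, fa: int) -> float:
    """Likelihood-ratio bias beta from raw counts (sketch)."""
    z = NormalDist().inv_cdf
    h = corrected_rate(hi, hi + mi)  # hit rate over signal trials
    f = corrected_rate(fa, fa + cr)  # false-alarm rate over noise trials
    return math.exp((z(f) ** 2 - z(h) ** 2) / 2.0)


print(beta(60, 40, 90, 10))  # above 1: a conservative criterion
```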

b: Zhang and Mueller’s [1]_ measure of decision bias

| [1] | Zhang, J., and Mueller, S. T. (2005). A note on ROC analysis and non-parametric estimate of sensitivity. Psychometrika, 70, 145-154. |
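Zhang and Mueller's bias measure b uses the same three regions as their A. The sketch below encodes those cases as commonly stated, for f <= h only; b equals 1 for neutral responding. The function name `b_zm` is hypothetical.

```python
def b_zm(h: float, f: float) -> float:
    """Zhang and Mueller's b from hit rate h and false-alarm rate f (sketch)."""
    if not (0.0 <= f <= h <= 1.0) or h == 0.0 or f == 1.0:
        raise ValueError("sketch assumes 0 <= f <= h <= 1, h > 0, f < 1")
    if f <= 0.5 <= h:
        return (5.0 - 4.0 * h) / (1.0 + 4.0 * f)
    if h < 0.5:  # here f <= h < 0.5
        return (h * h + h) / (h * h + f)
    # remaining case: 0.5 < f <= h
    return ((1.0 - f) ** 2 + (1.0 - h)) / ((1.0 - f) ** 2 + (1.0 - f))


print(b_zm(0.6, 0.1))  # above 1: few "yes" responses overall
```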

B’H: nonparametric measure of response bias

Developed by Hodos [1]_. See, Warm, Dember, and Howe [2]_ compared several nonparametric measures of response bias and found bppd to be empirically better.

See also

| [1] | Hodos, W. (1970). A nonparametric index of response bias for use in detection and recognition experiments. Psychological Bulletin, 74, 351-354. |

| [2] | See, J. E., Warm, J. S., Dember, W. N., and Howe, S. R. (1997). Vigilance and signal detection theory: An empirical evaluation of five measures of response bias. |

b’’: Grier [1]_ nonparametric measure of response bias

See also

| [1] | Grier, J. B. (1971). Nonparametric indexes for sensitivity and bias: Computing formulas. Psychological Bulletin, 75, 424-429. |
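Grier's B’’ compares h(1 - h) with f(1 - f). A minimal sketch with an illustrative name; the branch for h < f mirrors the h >= f case, as is common in later treatments. Negative values indicate a liberal criterion and positive values a conservative one.

```python
def bppg(h: float, f: float) -> float:
    """Grier's B'' from hit rate h and false-alarm rate f (sketch)."""
    sh = h * (1.0 - h)
    sf = f * (1.0 - f)
    if sh + sf == 0.0:
        raise ValueError("undefined when both rates are 0 or 1")
    if h >= f:
        return (sh - sf) / (sh + sf)
    # mirrored branch for below-chance performance (h < f)
    return (sf - sh) / (sf + sh)


print(bppg(0.9, 0.5))  # negative: liberal
```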

B’’d: nonparametric measure of response bias

First developed by Donaldson [1]_. See, Warm, Dember, and Howe [2]_ compared several nonparametric measures of response bias and endorse this measure when the assumption of a Gaussian noise distribution does not hold.

See also

| [1] | Donaldson, W. (1992). Measuring recognition memory. Journal of Experimental Psychology: General, 121, 275-277. |

| [2] | See, J. E., Warm, J. S., Dember, W. N., and Howe, S. R. (1997). Vigilance and signal detection theory: An empirical evaluation of five measures of response bias. |
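Donaldson's B’’d contrasts the product of the miss and correct-rejection rates with the product of the hit and false-alarm rates. A minimal sketch with an illustrative name; note the boundary where the denominator vanishes, which is the problem the loglinear variant addresses.

```python
def bppd(h: float, f: float) -> float:
    """Donaldson's B''d from hit rate h and false-alarm rate f (sketch)."""
    no_no = (1.0 - h) * (1.0 - f)  # miss rate times correct-rejection rate
    yes_yes = h * f                # hit rate times false-alarm rate
    den = no_no + yes_yes
    if den == 0.0:
        # h == 1 and f == 0 (or h == 0 and f == 1)
        raise ValueError("B''d is undefined at this boundary")
    # positive -> conservative, negative -> liberal, 0 -> neutral
    return (no_no - yes_yes) / den


print(bppd(0.6, 0.1))  # positive: conservative
```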

c: parametric measure of response bias

Generally recommended as a better measure than beta [1]_, [2]_, [3]_. The first reason is that d’ and c are independent [4]_. The formula is from Macmillan [5]_. Applies the Macmillan and Kaplan correction to extreme values [6]_.

See also

| [1] | Banks, W. P. (1970). Signal detection theory and human memory. Psychological Bulletin, 74, 81-99. |

| [2] | Macmillan, N. A., and Creelman, C. D. (1990). Response bias: Characteristics of detection theory, threshold theory, and nonparametric indexes. Psychological Bulletin, 107, 401-413. |

| [3] | Snodgrass, J. G., and Corwin, J. (1988). Pragmatics of measuring recognition memory: Applications to dementia and amnesia. Journal of Experimental Psychology: General, 117, 34-50. |

| [4] | Ingham, J. G. (1970). Individual differences in signal detection. Acta Psychologica, 34, 39-50. |

| [5] | Macmillan, N. A. (1993). Signal detection theory as data analysis method and psychological decision model. In G. Keren and C. Lewis (Eds.), A handbook for data analysis in the behavioral sciences: Methodological issues (pp. 21-57). Hillsdale, NJ: Erlbaum. |

| [6] | Macmillan, N. A., and Kaplan, H. L. (1985). Detection theory analysis of group data: Estimating sensitivity from average hit and false-alarm rates. Psychological Bulletin, 98, 185-199. |
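The criterion c is minus the mean of the z-transformed hit and false-alarm rates. In this sketch (illustrative name) the rates are assumed to be corrected already, because the inverse normal CDF is undefined at 0 and 1; positive c indicates a conservative criterion.

```python
from statistics import NormalDist


def criterion_c(h: float, f: float) -> float:
    """c = -(z(H) + z(F)) / 2 from hit and false-alarm rates (sketch)."""
    # Both rates must lie strictly between 0 and 1; correct extreme values
    # first (for example with the Macmillan and Kaplan rule).
    z = NormalDist().inv_cdf
    return -(z(h) + z(f)) / 2.0


print(criterion_c(0.6, 0.1))  # positive: conservative
```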

Return a shallow copy.

returns count of events

d’: parametric measure of sensitivity

An extremely popular measure, adapted from communication engineering by psychologists in the 1950s [1]_. The most notable text is Green and Swets [2]_. The calculation uses the formula given by Macmillan [3]_. Extreme probabilities of 0 and 1 are treated using the correction suggested by Macmillan and Kaplan [4]_: rates of 0 are replaced with 1/(2n) and rates of 1 are replaced with 1 - 1/(2n). This approach has been shown to be biased (Miller [5]_), so Hautus’s [6]_ loglinear approach may be preferred. For a more extensive overview of treating extreme values, see Stanislaw and Todorov [7]_.

See also

| [1] | Szalma, J. L., and Hancock, P. A. Signal detection theory. Class Lecture Notes. http://bit.ly/KIyKkt |

| [2] | Green, D. M., and Swets, J. A. (1966/1988). Signal detection theory and psychophysics, reprint edition. Los Altos, CA: Peninsula Publishing. |

| [3] | Macmillan, N. A. (1993). Signal detection theory as data analysis method and psychological decision model. In G. Keren and C. Lewis (Eds.), A handbook for data analysis in the behavioral sciences: Methodological issues (pp. 21-57). Hillsdale, NJ: Erlbaum. |

| [4] | Macmillan, N. A., and Kaplan, H. L. (1985). Detection theory analysis of group data: Estimating sensitivity from average hit and false-alarm rates. Psychological Bulletin, 98, 185-199. |

| [5] | Miller, J. (1996). The sampling distribution of d’. Perception and Psychophysics, 58, 65-72. |

| [6] | Hautus, M. (1995). Corrections for extreme proportions and their biasing effects on estimated values of d’. Behavior Research Methods, Instruments, and Computers, 27, 46-51. |

| [7] | Stanislaw, H., and Todorov, N. (1999). Calculation of signal detection theory measures. Behavior Research Methods, Instruments, and Computers, 31 (1), 137-149. |
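The d’ calculation described above can be sketched from raw counts. The helper names and the (hi, mi, cr, fa) argument order are hypothetical, and n is taken to be the number of trials behind each rate (signal trials for hits, noise trials for false alarms), which is the usual reading of the Macmillan and Kaplan rule but an assumption about this library.

```python
from statistics import NormalDist


def corrected_rate(k: int, n: int) -> float:
    """k / n, with 0 -> 1/(2n) and 1 -> 1 - 1/(2n) (Macmillan and Kaplan)."""
    if k == 0:
        return 1.0 / (2 * n)
    if k == n:
        return 1.0 - 1.0 / (2 * n)
    return k / n


def dprime(hi: int, mi: int, cr: int, fa: int) -> float:
    """d' = z(H) - z(F) with extreme rates corrected (sketch)."""
    z = NormalDist().inv_cdf
    h = corrected_rate(hi, hi + mi)
    f = corrected_rate(fa, fa + cr)
    return z(h) - z(f)


# Perfect performance on 10 + 10 trials: H -> 0.95, F -> 0.05
print(dprime(10, 0, 10, 0))
```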

f1: a.k.a. F1-Score, F-measure

The harmonic mean of precision and recall [1]_

Note

This metric cannot be calculated from probabilities

| [1] | van Rijsbergen, C. J. (1979). Information Retrieval (2nd ed.). Butterworth. http://bit.ly/8yAjR |
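The harmonic mean 2PR/(P + R) of precision P = TP/(TP + FP) and recall R = TP/(TP + FN) simplifies to 2TP/(2TP + FP + FN), which is why it needs counts rather than rates. A minimal sketch with an illustrative name:

```python
def f1(tp: int, fp: int, fn: int) -> float:
    """F1 score, the harmonic mean of precision and recall, from counts."""
    # 2PR/(P+R) == 2TP/(2TP+FP+FN); this form also handles TP == 0
    # as long as there is at least one FP or FN.
    return 2 * tp / (2 * tp + fp + fn)


# Precision 0.9 and recall 1.0 give 180/190, about 0.947
print(f1(tp=90, fp=10, fn=0))
```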

false discovery rate: FP / (TP + FP)

Note

This metric cannot be calculated from probabilities

returns list of event type count pairs

returns list of event types

loglinear beta: classic parametric measure of response bias [1]_.

Use of c() (or loglinear_c()) as a parametric measure of response bias may be preferred.

Applies Hautus’s [2]_ loglinear transformation to counts before calculating beta. (Transformation is applied to all values)

Note

This metric cannot be calculated from probabilities

See also

| [1] | Green, D. M., and Swets, J. A. (1966/1988). Signal detection theory and psychophysics, reprint edition. Los Altos, CA: Peninsula Publishing. |

| [2] | Hautus, M. (1995). Corrections for extreme proportions and their biasing effects on estimated values of d’. Behavior Research Methods, Instruments, and Computers, 27, 46-51. |

loglinear B’’d: nonparametric measure of response bias

Corrects boundary problems with bppd by applying Hautus’s [1]_ loglinear transformation to counts before calculating bppd. For more information, see the Analysis of loglinear_bppd.

Note

This metric cannot be calculated from probabilities

See also

| [1] | Hautus, M. (1995). Corrections for extreme proportions and their biasing effects on estimated values of d’. Behavior Research Methods, Instruments, and Computers, 27, 46-51. |

loglinear c: parametric measure of response bias

Applies Hautus’s [1]_ loglinear transformation to counts before calculating c. (Transformation is applied to all values)

Note

This metric cannot be calculated from probabilities

See also

| [1] | Hautus, M. (1995). Corrections for extreme proportions and their biasing effects on estimated values of d’. Behavior Research Methods, Instruments, and Computers, 27, 46-51. |

loglinear d’: parametric measure of sensitivity

Applies Hautus’s [1]_ loglinear transformation to counts before calculating dprime. (Transformation is applied to all values)

Note

This metric cannot be calculated from probabilities

See also

| [1] | Hautus, M. (1995). Corrections for extreme proportions and their biasing effects on estimated values of d’. Behavior Research Methods, Instruments, and Computers, 27, 46-51. |
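Hautus's loglinear correction is commonly described as adding 0.5 to every cell before computing rates, so each rate gains 0.5 in the numerator and 1 in the denominator and can never reach 0 or 1. A sketch for d’ (function name and argument order are assumptions):

```python
from statistics import NormalDist


def loglinear_dprime(hi: int, mi: int, cr: int, fa: int) -> float:
    """d' after adding 0.5 to every cell of the 2x2 table (sketch)."""
    z = NormalDist().inv_cdf
    h = (hi + 0.5) / (hi + mi + 1.0)  # corrected hit rate
    f = (fa + 0.5) / (fa + cr + 1.0)  # corrected false-alarm rate
    return z(h) - z(f)


# Perfect performance stays finite: H = 10.5/11, F = 0.5/11
print(loglinear_dprime(10, 0, 10, 0))
```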

Matthews [1]_ correlation coefficient

| [1] | Matthews, B.W. (1975). Comparison of the predicted and observed secondary structure of T4 phage lysozyme. Biochim. Biophys. Acta, 405, 442-451. |
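The Matthews correlation coefficient is the standard 2x2 formula (TP·TN - FP·FN) / sqrt((TP+FP)(TP+FN)(TN+FP)(TN+FN)), reading HI as TP, FA as FP, MI as FN, and CR as TN. Returning 0 when a margin is empty is a common convention and an assumption of this sketch.

```python
import math


def mcc(tp: int, fp: int, fn: int, tn: int) -> float:
    """Matthews correlation coefficient from 2x2 counts (sketch)."""
    den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if den == 0.0:
        return 0.0  # assumed convention when any margin is empty
    return (tp * tn - fp * fn) / den


print(mcc(50, 0, 0, 50))  # 1.0
```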

mutual information

Alternative metric to compare classifiers suggested by Wallach [1]_ and discussed by Murphy [2]_.

Note

This metric cannot be calculated from probabilities

Consider the following confusion matrices for classifiers A, B, and C. The prevalence rate is 90%.

| | A: 1 | A: 0 | B: 1 | B: 0 | C: 1 | C: 0 | Key: 1 | Key: 0 |
|---|---|---|---|---|---|---|---|---|
| 1 | 90 | 10 | 80 | 0 | 78 | 0 | HI | MI |
| 0 | 0 | 0 | 10 | 10 | 12 | 10 | FA | CR |

The above classifiers yield:

| Measure | A | B | C |
|---|---|---|---|
| d’ | 0.000 | 2.576 | 2.462 |
| Accuracy | 0.900 | 0.900 | 0.880 |
| Precision | 0.900 | 1.000 | 1.000 |
| Recall | 1.000 | 0.888 | 0.867 |
| F-score | 0.947 | 0.941 | 0.929 |
| Mutual information | 0.000 | 0.187 | 0.174 |

Intuition suggests B > C > A, but only d’ and mutual information reflect this relationship. Mutual information is slightly more sensitive ([1]_ and [2]_ do discuss d’).

| [1] | Wallach, H. (2006). Evaluation metrics for hard classifiers. Technical report, Cavendish Lab., Univ. Cambridge. |

| [2] | Murphy, K. P. (2007). Performance evaluation of binary classifiers. http://bit.ly/LzD5m0 |
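Mutual information between the two labels of a 2x2 table can be computed from the cell counts as the sum of p·log(p / (row marginal · column marginal)). This sketch uses natural logarithms (nats) and a hypothetical function name; the library may use a different base or normalization. Because the quantity is symmetric, it does not matter whether rows or columns hold the true labels.

```python
import math


def mutual_information(counts):
    """Mutual information (nats) between row and column labels of a 2x2 table."""
    total = sum(sum(row) for row in counts)
    rows = [sum(row) / total for row in counts]
    cols = [sum(counts[i][j] for i in range(2)) / total for j in range(2)]
    mi = 0.0
    for i in range(2):
        for j in range(2):
            p = counts[i][j] / total
            if p > 0.0:  # 0 * log(0) is taken as 0
                mi += p * math.log(p / (rows[i] * cols[j]))
    return mi


# Classifier A from the table: one row is empty, so the labels share no information
print(mutual_information([[90, 10], [0, 0]]))  # 0.0
```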

negative predictive value: TN / (TN + FN)

Note

This metric cannot be calculated from probabilities

returns probability of event type

If key is not found, d is returned if given, otherwise KeyError is raised

Remove and return some (key, value) pair as a 2-tuple; but raise KeyError if D is empty.

positive predictive value: TP / (TP + FP)

Note

This metric cannot be calculated from probabilities

sdt.precision() <==> sdt.ppv()

Note

This metric cannot be calculated from probabilities

sdt.precision() <==> sdt.ppv()

Note

This metric cannot be calculated from probabilities

sdt.recall() <==> sdt.sensitivity()

sensitivity: TP / (TP + FN)

Note

This metric cannot be calculated from probabilities

sdt.recall() <==> sdt.sensitivity()

specificity: TN / (TN + FP)

Note

This metric cannot be calculated from probabilities
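The count-based ratios above (ppv/precision, false discovery rate, npv, sensitivity/recall, specificity) can be sketched together, reading HI as TP, FA as FP, MI as FN, and CR as TN. The function name and dictionary keys are illustrative.

```python
def count_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Count-based ratios from a 2x2 table (HI=TP, FA=FP, MI=FN, CR=TN)."""
    return {
        "ppv": tp / (tp + fp),          # precision
        "fdr": fp / (tp + fp),          # 1 - ppv
        "npv": tn / (tn + fn),
        "sensitivity": tp / (tp + fn),  # recall
        "specificity": tn / (tn + fp),
    }


m = count_metrics(tp=40, fp=10, fn=20, tn=30)
print(m["ppv"], m["npv"], m["specificity"])  # 0.8 0.6 0.75
```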

Like dict.update() but subtracts counts instead of replacing them. Counts can be reduced below zero. Both the inputs and outputs are allowed to contain zero and negative counts.

Source can be an iterable, a dictionary, or another SDT instance.

Like dict.update() but add counts instead of replacing them.

Source can be an iterable, a dictionary, or another SDT instance.
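The counting methods above, and the `+`, `&`, `|`, and `-` operations described earlier, carry the same docstrings as Python's `collections.Counter`, so this sketch uses `Counter` directly as a stand-in. That SDT behaves identically is an assumption, and the event labels are illustrative.

```python
from collections import Counter

x = Counter({"HI": 3, "MI": 1})
y = Counter({"HI": 1, "FA": 2})

added = x + y   # sum of counts: HI 4, MI 1, FA 2
common = x & y  # minimum of counts: HI 1
either = x | y  # maximum of counts: HI 3, MI 1, FA 2
diff = x - y    # positive results only: HI 2, MI 1

z = Counter(x)
z.update(y)            # in place, adds counts: HI 4, MI 1, FA 2
z.subtract({"FA": 5})  # in place, may go negative: FA becomes -3
print(z["FA"], z["CR"])  # -3 0
```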