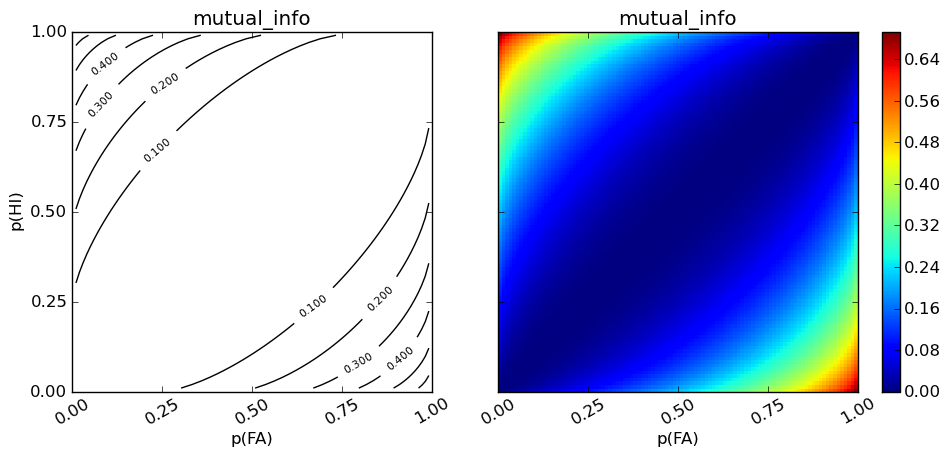

mutual information

An alternative metric for comparing classifiers, suggested by Wallach [1] and discussed by Murphy [2].

Note

This metric cannot be calculated from probabilities.

Consider the following confusion matrices for classifiers A, B, and C. The prevalence rate is 90%.

| | A: 1 | A: 0 | B: 1 | B: 0 | C: 1 | C: 0 | Cell labels |
|---|---|---|---|---|---|---|---|
| 1 | 90 | 10 | 80 | 0 | 78 | 0 | HI, MI |
| 0 | 0 | 0 | 10 | 10 | 12 | 10 | FA, CR |

The above classifiers yield:

| Measure | A | B | C |
|---|---|---|---|
| d’ | 0.000 | 2.576 | 2.462 |
| Accuracy | 0.900 | 0.900 | 0.880 |
| Precision | 0.900 | 1.000 | 1.000 |
| Recall | 1.000 | 0.888 | 0.867 |
| F-score | 0.947 | 0.941 | 0.929 |
| Mutual information | 0.000 | 0.187 | 0.174 |

Intuition suggests B > C > A, but only d’ and mutual information reflect this relationship. Mutual information is slightly more sensitive ([1] and [2] do discuss d’).
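The accuracy, precision, recall, and F-score values can be checked by hand from the confusion matrices. The sketch below is illustrative only and is not part of this library: `basic_measures` is a hypothetical helper, and it assumes that rows are predictions and columns are true classes, so the four cells read as true positives, false positives, false negatives, and true negatives. That reading is the one that reproduces the tabulated precision and recall.

```python
def basic_measures(tp, fp, fn, tn):
    """Return (accuracy, precision, recall, F-score) from four cell counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / float(total)
    # Guard against empty denominators, e.g. a classifier that never says "1".
    precision = tp / float(tp + fp) if (tp + fp) else 0.0
    recall = tp / float(tp + fn) if (tp + fn) else 0.0
    if precision + recall:
        f_score = 2 * precision * recall / (precision + recall)
    else:
        f_score = 0.0
    return accuracy, precision, recall, f_score


# Cells in reading order: top-left, top-right, bottom-left, bottom-right.
classifiers = {
    "A": (90, 10, 0, 0),
    "B": (80, 0, 10, 10),
    "C": (78, 0, 12, 10),
}

for name in sorted(classifiers):
    measures = basic_measures(*classifiers[name])
    print(name, ["%.3f" % m for m in measures])
```

Under these assumptions A and B tie on accuracy, and A scores highest on F-score even though it labels every case as positive, which is why these measures fail to recover the B > C > A ordering.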

[1] Wallach, H. (2006). Evaluation metrics for hard classifiers. Technical report, Cavendish Lab., Univ. Cambridge.

[2] Murphy, K. P. (2007). Performance evaluation of binary classifiers. http://bit.ly/LzD5m0

Calculates the metric based on hit, miss, correct rejection, and false alarm counts.

Routes the call to the appropriate method based on the number of arguments and the availability of .prob.
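As a rough illustration of computing mutual information from the four counts, here is a minimal sketch. It is not this library's implementation: `mutual_information` is a hypothetical standalone function, the argument order simply follows the sentence above, and natural logarithms (nats) are assumed because they give values close to those in the example table. Empty cells are skipped, following the convention that 0 · log 0 = 0.

```python
import math


def mutual_information(hi, mi, cr, fa):
    """Mutual information (in nats) between the two axes of a 2x2 table."""
    # Lay the counts out as in the example matrices: [[HI, MI], [FA, CR]].
    table = [[hi, mi], [fa, cr]]
    total = float(hi + mi + cr + fa)
    row_sums = [sum(row) for row in table]
    col_sums = [table[0][j] + table[1][j] for j in range(2)]

    info = 0.0
    for i in range(2):
        for j in range(2):
            if table[i][j] == 0:
                continue  # an empty cell contributes nothing to the sum
            p_joint = table[i][j] / total
            # Joint probability expected if the two axes were independent.
            p_indep = (row_sums[i] / total) * (col_sums[j] / total)
            info += p_joint * math.log(p_joint / p_indep)
    return info


# Classifiers A, B, and C from the example, as (HI, MI, CR, FA) cell values.
for name, counts in [("A", (90, 10, 0, 0)),
                     ("B", (80, 0, 10, 10)),
                     ("C", (78, 0, 10, 12))]:
    print(name, "%.4f" % mutual_information(*counts))
```

With these inputs the sketch gives 0 for A, about 0.186 for B, and about 0.174 for C, which preserves the B > C > A ordering. Because mutual information is symmetric in the two axes, swapping the MI and FA cells does not change the result.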